Computer vision is the area of artificial intelligence that analyzes information obtained from images and video. Almost everyone uses a smartphone, laptop, or other device equipped with a camera, and many more cameras and sensors are installed on streets and inside buildings. As a result, the volume of visual data being produced is enormous.

According to a 2015 Cisco study, video was projected to account for more than 80% of all Internet traffic by 2017, and the real share may be even higher. That is why it is essential to process and analyze this incoming data efficiently and quickly.

History

Vision originally served both for protection and for finding food. Predators hunted their prey, and animals had to evolve to gain an advantage in this rivalry. Over time, vision became the primary way of obtaining information. All of this concerns biological vision, which drove the development of a large number of species on Earth but has little to do with computer vision directly.

It is believed that the first camera appeared during the Renaissance (circa 1600) and was based on the pinhole camera principle. As technology developed, cameras spread almost everywhere and became arguably the most common sensor in the world. An important impetus for computer vision was the electrophysiology work of Hubel and Wiesel in the 1950s and 1960s, which studied how the visual cortex responds to stimuli. One of the earliest works in computer vision itself was “Block World” by Larry Roberts. However, these early efforts were exceptionally bold and ambitious attempts that could not be fully realized at the time.

In the early days, recognizing an object was very difficult, and in most cases simply impossible, but segmenting an image into objects was feasible. Segmentation, that is, grouping pixels into meaningful regions, made it possible to make progress on the main tasks.

At the beginning of the new millennium, machine learning methods rapidly gained momentum. In 2001, Viola and Jones used the AdaBoost algorithm to build a detector that could find human faces in real time despite the slow chips of that era. In 2006, Fujifilm released the first camera with a built-in real-time face detector based on this work.
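The core idea of AdaBoost, combining many weak classifiers into one strong classifier by reweighting the training examples, can be illustrated with a minimal sketch. This is not the face detector itself: it assumes one-dimensional inputs and simple threshold rules ("decision stumps") as weak classifiers, and the names `train_adaboost`, `predict`, `XS`, and `YS` are hypothetical choices made for this example.

```python
import math
from typing import List, Tuple

# One weak classifier: (threshold, polarity, alpha). Hypothetical layout.
Stump = Tuple[float, int, float]


def stump_predict(x: float, threshold: float, polarity: int) -> int:
    """Return `polarity` if x is above the threshold, otherwise `-polarity`."""
    return polarity if x > threshold else -polarity


def train_adaboost(xs: List[float], ys: List[int], rounds: int) -> List[Stump]:
    """Train `rounds` decision stumps on 1-D data with labels in {-1, +1}."""
    n = len(xs)
    weights = [1.0 / n] * n  # start with uniform example weights
    values = sorted(set(xs))
    # Candidate thresholds: one below the minimum, then midpoints between values.
    thresholds = [values[0] - 1.0] + [(a + b) / 2 for a, b in zip(values, values[1:])]
    ensemble: List[Stump] = []
    for _ in range(rounds):
        # Pick the stump with the lowest weighted error on the current weights.
        best = None
        for t in thresholds:
            for p in (1, -1):
                err = sum(w for x, y, w in zip(xs, ys, weights)
                          if stump_predict(x, t, p) != y)
                if best is None or err < best[0]:
                    best = (err, t, p)
        err, t, p = best
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid division by zero / log(0)
        alpha = 0.5 * math.log((1 - err) / err)  # vote strength of this stump
        ensemble.append((t, p, alpha))
        # Increase weights of misclassified examples, decrease the rest.
        weights = [w * math.exp(-alpha * y * stump_predict(x, t, p))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble: List[Stump], x: float) -> int:
    """Weighted vote of all stumps."""
    score = sum(alpha * stump_predict(x, t, p) for t, p, alpha in ensemble)
    return 1 if score >= 0 else -1


# Toy data that no single threshold can separate.
XS = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
YS = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

if __name__ == "__main__":
    model = train_adaboost(XS, YS, rounds=3)
    print([predict(model, x) for x in XS])
```

On this toy data a single stump misclassifies at least three points, while three boosted stumps classify every training point correctly. A real detector would replace the threshold rules with image features and add further machinery for speed.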

In the mid-2000s, a benchmark dataset, the PASCAL Visual Object Classes (VOC) Challenge, appeared, making it possible to measure progress in object recognition. However, most machine learning models take high-dimensional input, have many parameters to fit, and need a relatively large amount of training data, which makes them complex to train.

ImageNet

ImageNet is a large-scale image dataset and the basis of a visual recognition benchmark. More than 14 million images were collected and then sorted into specific categories. It is one of the largest datasets in the field of artificial intelligence, and it took object recognition to a completely different level. Early on, recognition error rates were high. Over time they fell significantly, which gives hope that such errors may one day be nearly eliminated.

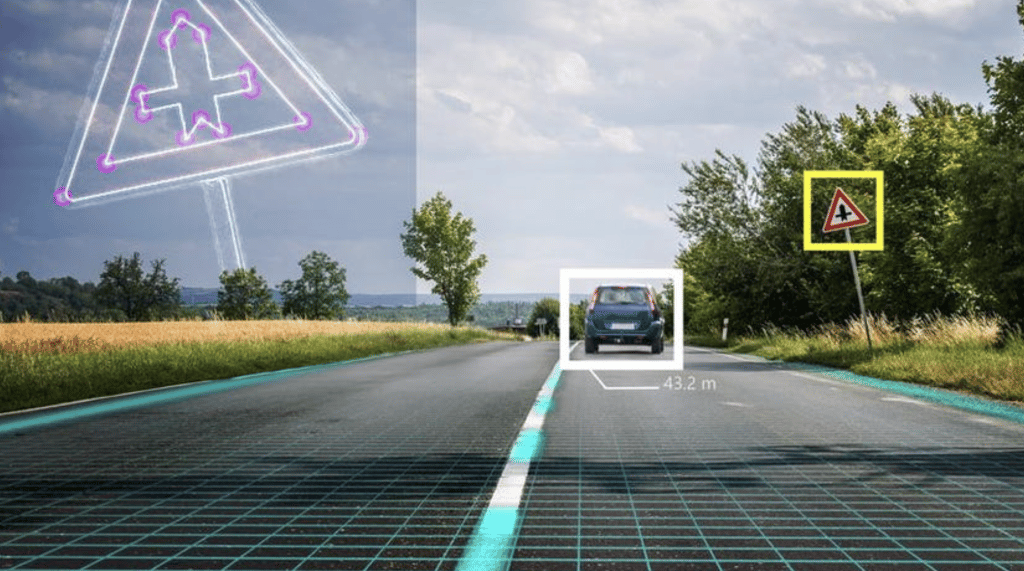

The main problem in computer vision is image classification. The algorithm examines the picture and then assigns it to one of the object categories represented in the database. This model has a wide range of applications: identifying dishes, animals, and retail goods, recognizing works of art, and so on.
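As a rough illustration of classifying a picture against a labeled database, here is a minimal nearest-neighbor sketch. It assumes tiny grayscale images stored as flat lists of pixel values, and the names `l1_distance`, `classify`, and `DATABASE` are hypothetical. Modern systems use learned models rather than raw pixel comparison, so this only shows the shape of the task.

```python
from typing import List, Tuple

Image = List[int]  # flattened grayscale pixels in the range 0-255


def l1_distance(a: Image, b: Image) -> int:
    """Sum of absolute pixel differences between two same-sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b))


def classify(image: Image, database: List[Tuple[Image, str]]) -> str:
    """Return the label of the database image closest to `image`."""
    if not database:
        raise ValueError("database is empty")
    _, best_label = min(database, key=lambda entry: l1_distance(image, entry[0]))
    return best_label


# Toy 2x2 "images" with made-up labels, for illustration only.
DATABASE = [
    ([0, 0, 0, 0], "dark"),
    ([255, 255, 255, 255], "bright"),
    ([255, 255, 0, 0], "top-lit"),
]

if __name__ == "__main__":
    print(classify([10, 20, 5, 0], DATABASE))  # prints "dark"
```

The query image is compared with every stored example, and the label of the closest one is returned. Swapping the distance function or the database changes the behavior without touching `classify`.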

Modern problems in the field of computer vision

Object recognition is steadily improving, but other tasks must also be solved to advance computer vision, namely detecting every object in an image and generating captions for images. These tasks are difficult, but solving them will take computer vision beyond its current limited scope.

A turning point in object recognition was the development of an eight-layer convolutional neural network now known as AlexNet (originally called SuperVision). It was developed in 2012 by Geoffrey Hinton’s group in Toronto. Modern networks keep getting deeper: the number of layers has reached levels that once seemed unthinkable (more than 200), although GPU memory limits how far this can go.

Convolutional networks themselves were developed well before AlexNet, but they were applied in other areas, attracted less attention, and were limited by the hardware of the time. The amount of available data has also increased, and data is one of the critical ingredients of machine learning. More data makes it possible to train higher-capacity models and to keep improving their results. It is also worth noting that new programming languages and tools have appeared, which provide a broader range of options for solving particular tasks.
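The basic operation inside a convolutional layer can be sketched in a few lines. The example below assumes a single-channel image and a single hand-written filter, whereas real networks learn many filters per layer. The names `conv2d`, `relu`, `IMAGE`, and `KERNEL` are hypothetical. As in most deep learning libraries, the "convolution" here does not flip the kernel.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    if out_h < 1 or out_w < 1:
        raise ValueError("kernel must not be larger than the image")
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map: Matrix) -> Matrix:
    """Replace negative responses with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 4x4 image: dark on the left, bright on the right.
IMAGE = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
# Hand-written filter that responds to a dark-to-bright vertical edge.
KERNEL = [[-1.0, 1.0],
          [-1.0, 1.0]]

if __name__ == "__main__":
    for row in relu(conv2d(IMAGE, KERNEL)):
        print(row)  # each row is [0.0, 2.0, 0.0]
```

The filter responds only in the middle column, where the edge is. A deep network stacks many such layers, so later filters operate on the feature maps produced by earlier ones.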

Conclusion

A person sees much more than a set of labels in a picture, and this is what computer vision strives for: not just classifying an object, its bounding box, or its actions, but understanding the complete scene. To get there, current algorithms must be improved and many open problems solved, such as semantic segmentation and perceptual grouping. Solving these problems, together with advances in modern technology, will significantly expand what computer vision can do.

Computer vision is a fascinating field that is actively developing thanks to machine learning and improvements in convolutional neural networks. Progress in this area will significantly expand the opportunities computer vision provides and could substantially increase productivity in many fields.